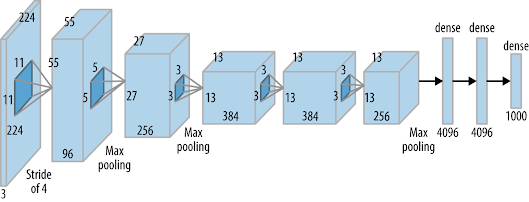

AlexNet (Convolutional Neural Network)

AlexNet (Convolutional Neural Network) Paper Review

If you are interested in computer vision tasks using Artificial Intelligence, you may have heard of AlexNet, which surprised the world in 2012 at the ILSVRC (ImageNet Large Scale Visual Recognition Challenge) competition.

What is AlexNet?

AlexNet is a Convolutional Neural Network designed primarily by Alex Krizhevsky. It was published in 2012 together with Ilya Sutskever and Krizhevsky's doctoral advisor, Geoffrey Hinton.

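AlexNet combined several notable features. The first is the ReLU nonlinearity. Instead of the tanh function, AlexNet uses Rectified Linear Units (ReLUs), which let the model reach a target training error much faster than an equivalent network using tanh. In the paper, a four-layer CNN with ReLUs reached a 25% training error rate on CIFAR-10 about six times faster than the same network with tanh units.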
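
A small sketch of why ReLU trains faster: tanh saturates, so its gradient shrinks toward zero for large inputs, while the ReLU gradient stays at 1 for any positive input. The helper names below (`relu`, `relu_grad`, `tanh_grad`) are illustrative, not taken from the paper.

```python
import math


def relu(x: float) -> float:
    """Rectified Linear Unit: f(x) = max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at x == 0)."""
    return 1.0 if x > 0 else 0.0


def tanh_grad(x: float) -> float:
    """Gradient of tanh: 1 - tanh(x)^2, which shrinks toward 0 as |x| grows."""
    return 1.0 - math.tanh(x) ** 2


if __name__ == "__main__":
    for x in (0.5, 2.0, 5.0):
        print(f"x={x}: relu'={relu_grad(x):.4f}  tanh'={tanh_grad(x):.6f}")
```

At x = 5 the tanh gradient is already below 0.001, so weight updates flowing through a saturated tanh unit become tiny, whereas the ReLU unit still passes the full gradient.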

Secondly, AlexNet was trained on two GPUs (NVIDIA GTX 580s with 3 GB of memory each). This was possible because AlexNet put half of the model's neurons on one GPU and the other half on the other GPU, with the two GPUs communicating only in certain layers. Not only does this allow a bigger model to be trained than would fit on a single GPU, but it also cuts down on the training time.
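
The idea of splitting neurons across GPUs can be sketched for a single fully connected layer: the output neurons (rows of the weight matrix) are divided in half, each half is computed separately, and the results are concatenated. This is a minimal illustration under stated assumptions: the "GPUs" here are just two function calls, `matvec` and `two_gpu_forward` are hypothetical helpers, and the case shown is one where both halves see the full input (a layer where the GPUs communicate).

```python
def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def two_gpu_forward(weights, x):
    """Hypothetical model-parallel forward pass for one fully connected layer.

    The layer's output neurons (rows of `weights`) are split in half; each
    half would live on its own GPU. Both halves see the full input here, and
    their outputs are concatenated, so the result matches the unsplit layer.
    """
    half = len(weights) // 2
    out_gpu0 = matvec(weights[:half], x)  # would run on GPU 0
    out_gpu1 = matvec(weights[half:], x)  # would run on GPU 1
    return out_gpu0 + out_gpu1  # list concatenation gathers the two halves


if __name__ == "__main__":
    W = [[1, 2], [3, 4], [5, 6], [7, 8]]
    print(two_gpu_forward(W, [1, -1]))
```

Each device only has to store and compute half of the layer's weights, which is what lets a larger model fit in the combined memory.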

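Lastly, it uses overlapping pooling. Normally, CNN models pool the outputs of neighboring groups of neurons with no overlap. AlexNet instead uses 3×3 pooling windows with a stride of 2, so neighboring windows overlap. The authors report that this reduced the top-1 and top-5 error rates by 0.4% and 0.3%, respectively, compared with non-overlapping pooling, and observed that models with overlapping pooling were slightly harder to overfit.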
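
A minimal pure-Python sketch of max pooling shows the difference: with window z = 3 and stride s = 2, each window shares a row or column with its neighbor, whereas z = 2, s = 2 gives disjoint windows. The output size follows (n − z) // s + 1; for example, a 55×55 feature map pools down to 27×27 with z = 3, s = 2. The function names are illustrative.

```python
def pool_output_size(n: int, z: int, s: int) -> int:
    """Output length of a pooling layer with window z and stride s (no padding)."""
    return (n - z) // s + 1


def max_pool2d(grid, z: int = 3, s: int = 2):
    """Max pooling over a 2D list; windows overlap whenever s < z."""
    rows = pool_output_size(len(grid), z, s)
    cols = pool_output_size(len(grid[0]), z, s)
    return [
        [
            max(grid[r * s + i][c * s + j] for i in range(z) for j in range(z))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


if __name__ == "__main__":
    grid = [[r * 5 + c for c in range(5)] for r in range(5)]
    print(max_pool2d(grid, z=3, s=2))  # overlapping windows
    print(max_pool2d(grid, z=2, s=2))  # non-overlapping windows
```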

The larger a model gets, the more prone it is to overfitting, and AlexNet, with about 60 million parameters, was no exception.

To reduce overfitting, AlexNet uses dropout and data augmentation.

* Dropout :

This technique sets the output of each hidden neuron to zero with a fixed probability (0.5 in AlexNet, applied in the first two fully connected layers). The neurons which are “dropped out” in this way do not contribute to the forward pass and do not participate in backpropagation. At test time, all neurons are used, but their outputs are multiplied by 0.5.

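Because a different random subset of neurons is active in every iteration, a neuron cannot rely on the presence of particular other neurons. It is therefore forced to learn more robust features that are useful in combination with many different random subsets of the other neurons.

In effect, every time an input is presented, the neural network samples a different architecture, although all of these architectures share the same weights.

However, dropout also slows convergence: the authors report that it roughly doubles the number of iterations needed.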
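
The dropout procedure described above can be sketched in a few lines: during training each activation is zeroed independently with probability p, and at test time every neuron is kept but its output is scaled by (1 − p), which is 0.5 for AlexNet's p = 0.5. The function names are illustrative. Many modern frameworks instead use "inverted dropout", which rescales during training so that no change is needed at test time.

```python
import random


def dropout_train(activations, p=0.5, rng=None):
    """Training-time dropout: zero each activation independently with probability p."""
    if rng is None:
        rng = random.Random()
    # rng.random() is in [0, 1), so p=1.0 drops everything and p=0.0 drops nothing.
    return [0.0 if rng.random() < p else a for a in activations]


def dropout_test(activations, p=0.5):
    """Test-time rule: keep every neuron, but scale its output by (1 - p)."""
    return [a * (1.0 - p) for a in activations]


if __name__ == "__main__":
    acts = [1.0, 2.0, 3.0, 4.0]
    print(dropout_train(acts, rng=random.Random(0)))  # a random subset is zeroed
    print(dropout_test(acts))                         # every output halved
```

Scaling by (1 − p) at test time keeps the expected input to the next layer the same as it was during training.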

* Data Augmentation :

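AlexNet generated image translations and horizontal reflections by extracting random 224×224 patches (and their horizontal reflections) from the 256×256 training images. This increased the size of the training set by a factor of 2048 (32 × 32 translations × 2 reflections), although the resulting training examples are highly interdependent.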
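
A minimal sketch of the crop-and-reflect augmentation, assuming an image is stored as a list of rows of pixel values (a real pipeline would use an image library, and the function names here are illustrative). For AlexNet's setting the call would be `random_crop_and_flip(image, 224)` on a 256×256 image.

```python
import random


def horizontal_flip(image):
    """Mirror an image (list of rows) left to right."""
    return [row[::-1] for row in image]


def random_crop_and_flip(image, crop, rng=None):
    """Take a random crop x crop patch and mirror it with probability 0.5."""
    if rng is None:
        rng = random.Random()
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - crop)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop)
    patch = [row[left:left + crop] for row in image[top:top + crop]]
    return horizontal_flip(patch) if rng.random() < 0.5 else patch


if __name__ == "__main__":
    img = [[r * 4 + c for c in range(4)] for r in range(4)]
    print(random_crop_and_flip(img, 2, random.Random(3)))
```

Because a fresh crop position and flip are drawn every time an image is used, the network rarely sees exactly the same input twice.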

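They also performed Principal Component Analysis (PCA) on the RGB pixel values of the training set and added random multiples of the principal components to each training image, altering the intensities of the RGB channels. This reduced the top-1 error rate by more than 1%.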
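
A sketch of the PCA color shift, assuming the three eigenvalues λᵢ and eigenvectors pᵢ of the 3×3 RGB covariance matrix have already been computed (for example with `numpy.linalg.eigh`). Each image gets one random αᵢ per component, drawn from a Gaussian with mean 0 and standard deviation 0.1, and every pixel is shifted by Σ αᵢλᵢpᵢ. The function name and argument layout are illustrative assumptions.

```python
import random


def pca_color_shift(pixels, eigvals, eigvecs, sigma=0.1, rng=None):
    """Add sum_i(alpha_i * lambda_i * p_i) to every RGB pixel of one image.

    pixels  : list of (r, g, b) values for one image
    eigvals : the 3 eigenvalues lambda_i of the RGB covariance matrix
    eigvecs : the 3 matching eigenvectors p_i, each a length-3 list
    Each alpha_i ~ N(0, sigma) is drawn once per image, so every pixel
    receives the same shift.
    """
    if rng is None:
        rng = random.Random()
    alphas = [rng.gauss(0.0, sigma) for _ in range(3)]
    delta = [
        sum(eigvecs[i][c] * alphas[i] * eigvals[i] for i in range(3))
        for c in range(3)
    ]
    return [tuple(v + d for v, d in zip(px, delta)) for px in pixels]


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    px = [(10.0, 20.0, 30.0), (1.0, 2.0, 3.0)]
    print(pca_color_shift(px, [1.0, 0.5, 0.2], identity, rng=random.Random(0)))
```

Because the shift follows the principal components of natural RGB variation, it mimics changes in illumination intensity and color rather than adding arbitrary noise.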

******************************************************************************

Pros of AlexNet

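AlexNet is considered a milestone for CNNs in image classification: it won ILSVRC-2012 with a top-5 test error rate of 15.3%, compared with 26.2% for the second-best entry.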

Many of its ingredients, such as the conv+pooling design, dropout, GPU training, parallel computing, and ReLU, are still standard practice in computer vision.

Cons of AlexNet

AlexNet is NOT very deep (8 learned layers: 5 convolutional and 3 fully connected) compared with later models such as VGGNet, GoogLeNet, and ResNet.

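Its large convolution filters (11×11 in the first layer and 5×5 in the second) fell out of favor shortly afterwards; later models such as VGGNet replaced them with stacks of small 3×3 filters.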
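
Some quick arithmetic shows why stacks of small filters won out: two stacked 3×3 convolutions (stride 1) cover the same 5×5 receptive field as one 5×5 convolution but use fewer weights, and they add an extra nonlinearity between them. The channel count of 64 is a hypothetical value chosen for illustration, and biases are ignored.

```python
def conv_weights(k: int, c_in: int, c_out: int) -> int:
    """Number of weights in a k x k convolution layer (biases ignored)."""
    return k * k * c_in * c_out


def stacked_receptive_field(num_layers: int, k: int) -> int:
    """Receptive field of num_layers stacked k x k convolutions with stride 1."""
    return num_layers * (k - 1) + 1


if __name__ == "__main__":
    c = 64  # hypothetical channel count, same for input and output
    print("one 5x5 layer: ", conv_weights(5, c, c))       # 102400
    print("two 3x3 layers:", 2 * conv_weights(3, c, c))   # 73728
    print("receptive field of two 3x3:", stacked_receptive_field(2, 3))  # 5
```

In general one 5×5 layer needs 25C² weights while two 3×3 layers need 18C², about 28% fewer for the same receptive field.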

