What is ResNet (Residual Network)?

Today I want to talk about the Residual Network (a.k.a. ResNet).

ResNet was first introduced in the paper "Deep Residual Learning for Image Recognition" by a team at Microsoft Research.

The idea behind ResNet was to solve a problem with typical deep CNNs.

CNNs show amazing performance on computer vision tasks; however, they run into a limitation when they are made very deep.

Researchers had been trying to build deeper CNNs, such as Very Deep Convolutional Networks (VGG).

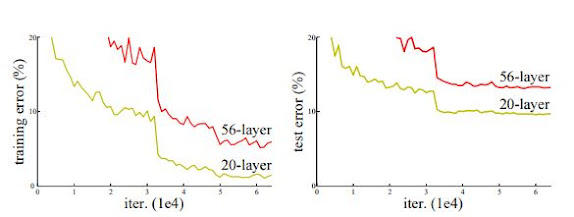

Normally, the results of these networks were great, except that, ironically, the performance of the network decreases as it gets deeper. As the paper says: "When deeper networks are able to start converging, a degradation problem has been exposed: with the network depth increasing, accuracy gets saturated (which might be unsurprising) and then degrades rapidly."

This problem is often linked to the "Vanishing Gradient": in a deep neural network, it is hard for the model to propagate the gradient of the loss back to the early layers of the network.

This is because the gradient is repeatedly multiplied by the derivative of the activation function (such as sigmoid), so it gets smaller and smaller until it is close to 0.
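To get a feel for how quickly this shrinking happens, here is a minimal sketch in plain Python. It is a simplification and not taken from the paper: it ignores the weights entirely and assumes every layer sees a pre-activation of 0, which is where the sigmoid derivative is largest (0.25). The helper names `sigmoid_grad` and `gradient_scale` are made up for this illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z: float) -> float:
    # Derivative of the sigmoid: s * (1 - s), which peaks at 0.25 when z = 0.
    s = sigmoid(z)
    return s * (1.0 - s)


def gradient_scale(depth: int, z: float = 0.0) -> float:
    # Product of `depth` sigmoid derivatives, all evaluated at the same
    # pre-activation z (an assumption made only to keep the example small).
    return sigmoid_grad(z) ** depth


# Print how the factor shrinks as the number of layers grows.
for depth in (1, 5, 10, 20):
    print(depth, gradient_scale(depth))
```

Even in this best case for the sigmoid, the factor after 20 layers is below 1e-11, so the early layers would receive almost no learning signal from the activation derivatives alone.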

There are a few different ways to mitigate this problem, such as using ReLU as the activation function or using Batch Normalization between layers.

Even with the above techniques available, I believe ResNet is the best way to deal with the "Vanishing Gradient" problem.

Now I want to talk about what ResNet is and why it is special.

The basic idea of the deep residual learning framework is simple.

It just adds the information already learned by previous layers back into the output of later layers.

In this way, what earlier layers learned is carried forward, so the performance of the model should not decrease just because the network gets deeper.

Also, each block or layer of the network only has to care about the new information it has to learn, instead of taking all the information into account when updating its weights.

The paper denotes the desired underlying mapping as H(x) and lets the stacked layers fit another mapping, F(x) := H(x) − x. The original mapping H(x) is then recast as F(x) + x.

This idea rests on the hypothesis that it would be "easier to optimize the residual mapping than to optimize the original, unreferenced mapping".

To add the information, the shortcut simply performs an identity mapping, and its output is added to the outputs of the stacked layers or blocks.

One interesting fact is that the "identity shortcut connections add neither extra parameter nor computational complexity".
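The F(x) + x idea can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions, not the paper's implementation: it uses small fully connected layers instead of convolutions, has no biases or Batch Normalization, and leaves out the ReLU that the paper applies after the addition so the identity behaviour stays visible. The names `residual_branch`, `residual_block` and `num_params` are hypothetical.

```python
def relu(v):
    return [max(0.0, a) for a in v]


def linear(weights, v):
    # Matrix-vector product; `weights` is a list of rows.
    return [sum(w * a for w, a in zip(row, v)) for row in weights]


def residual_branch(x, w1, w2):
    # F(x): two weight layers with a ReLU in between.
    return linear(w2, relu(linear(w1, x)))


def residual_block(x, w1, w2):
    # y = F(x) + x. The shortcut is just an element-wise addition of the input.
    fx = residual_branch(x, w1, w2)
    return [f + a for f, a in zip(fx, x)]


def num_params(*matrices):
    # Count the learnable weights in the given matrices.
    return sum(len(row) for m in matrices for row in m)


x = [1.0, -2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]

# With F(x) = 0 the block passes its input through unchanged.
print(residual_block(x, zeros, zeros))

# The only weights are w1 and w2; the shortcut contributes none.
print(num_params(zeros, zeros))
```

When the weights of the branch are all zero, the block returns its input unchanged, which is one way to read the claim that a block only needs to learn what is new. The parameter count covers only `w1` and `w2`, since the addition itself has no weights.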

The paper shows that:

1) Extremely deep residual nets are easy to optimize, but the counterpart “plain” nets (that simply stack layers) exhibit higher training error when the depth increases;

2) Deep residual nets can easily enjoy accuracy gains from greatly increased depth, producing results substantially better than previous networks.
